These instructions let you create pods that consume accelerated vDPA interfaces on an OVN-Kubernetes (ovn-k) secondary network.

Prerequisites:

- A running OCP cluster (latest 4.14)
- The SR-IOV network operator is installed (latest upstream)
- The kubernetes-nmstate operator is installed (latest downstream)
- The ovn-kubernetes running image is quay.io/rh_ee_lmilleri/ovn-daemonset-f:vhost-vdpa-0906-3
- Huge pages are enabled on the worker nodes (needed for running DPDK applications)
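The pod manifest further down requests `hugepages-1Gi: "6Gi"`, so a worker needs at least six 1 GiB huge pages. As a rough check, the sketch below parses `/proc/meminfo`-style text and reports how many 1 GiB pages are configured. It assumes 1 GiB is the node's default huge page size, since `/proc/meminfo` only reports the default size. The function name `hugepages_1g_total` is made up for illustration.

```shell
# Hypothetical helper: read /proc/meminfo-style text on stdin and print the
# number of 1 GiB huge pages configured (0 if the default size is not 1 GiB).
hugepages_1g_total() {
  awk '
    /^HugePages_Total:/ { total = $2 }
    /^Hugepagesize:/    { size_kb = $2 }
    END {
      if (size_kb == 1048576) print total + 0
      else print 0
    }'
}
```

On a worker node, run `hugepages_1g_total < /proc/meminfo`. A result below 6 suggests the node cannot satisfy the example pod's huge page request.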

Create a MachineConfigPool for the nodes that will use hardware offload:

cat << EOF | oc apply -f -
apiVersion: machineconfiguration.openshift.io/v1
kind: MachineConfigPool
metadata:
  name: mcp-offloading
spec:
  machineConfigSelector:
    matchExpressions:
      - {key: machineconfiguration.openshift.io/role, operator: In, values: [worker,mcp-offloading]}
  nodeSelector:
    matchLabels:
      node-role.kubernetes.io/mcp-offloading: ""
EOF

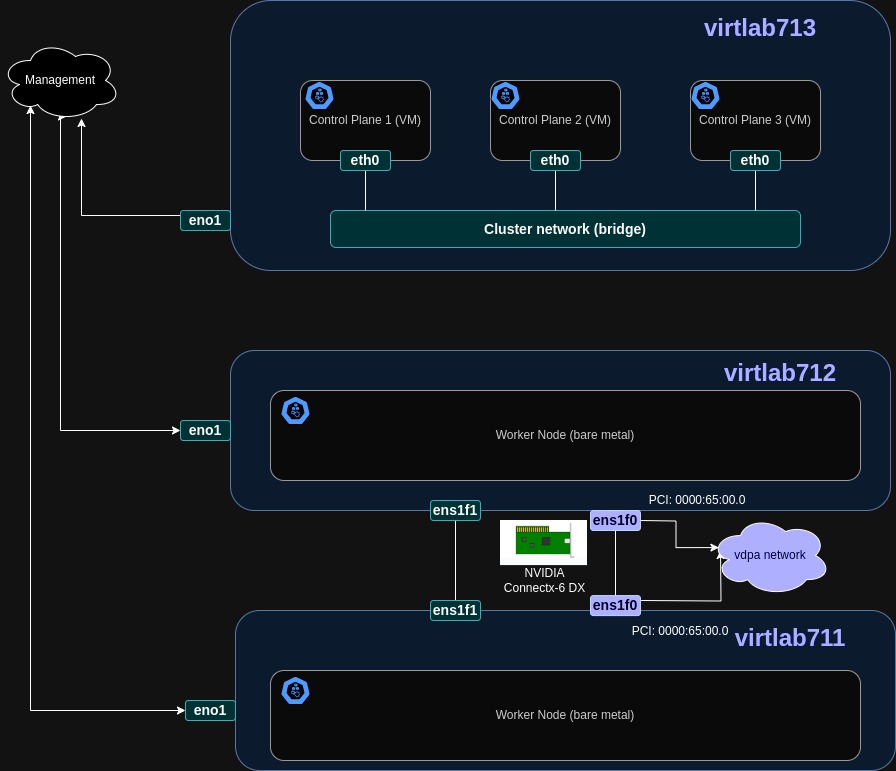

Label each node as SR-IOV capable and add it to the `mcp-offloading` pool:

oc label node virtlab712.virt.lab.eng.bos.redhat.com feature.node.kubernetes.io/network-sriov.capable=true
oc label node virtlab712.virt.lab.eng.bos.redhat.com node-role.kubernetes.io/mcp-offloading=""
oc label node virtlab711.virt.lab.eng.bos.redhat.com feature.node.kubernetes.io/network-sriov.capable=true
oc label node virtlab711.virt.lab.eng.bos.redhat.com node-role.kubernetes.io/mcp-offloading=""

Enable OVS hardware offload for that pool:

cat << EOF | oc apply -f -
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkPoolConfig
metadata:
  name: sriovnetworkpoolconfig-offload
  namespace: openshift-sriov-network-operator
spec:
  ovsHardwareOffloadConfig:
    name: mcp-offloading
EOF

Create the SR-IOV node policy that puts the NIC in switchdev mode and exposes a vhost-vDPA virtual function:

cat << EOF | oc apply -f -
apiVersion: sriovnetwork.openshift.io/v1
kind: SriovNetworkNodePolicy
metadata:
  name: policy
  namespace: openshift-sriov-network-operator
spec:
  nodeSelector:
    feature.node.kubernetes.io/network-sriov.capable: "true"
  resourceName: mlxnics
  priority: 5
  numVfs: 1
  nicSelector:
    deviceID: "101d"
    rootDevices:
      - 0000:65:00.0
    vendor: "15b3"
  eSwitchMode: switchdev
  deviceType: netdevice
  vdpaType: vhost
EOF

Create the network attachment definition in the `vdpa` namespace (create the namespace first if it does not exist):

cat << EOF | oc apply -f -
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: ovn-kubernetes-a
  namespace: vdpa
  annotations:
    k8s.v1.cni.cncf.io/resourceName: openshift.io/mlxnics
spec:
  config: |2
    {
      "cniVersion": "0.3.1",
      "name": "l2-network",
      "type": "ovn-k8s-cni-overlay",
      "capabilities" : {"CNIDeviceInfoFile": true},
      "topology":"localnet",
      "subnets": "10.100.200.0/24",
      "vlanID": 33,
      "mtu": 1300,
      "netAttachDefName": "vdpa/ovn-kubernetes-a",
      "excludeSubnets": "10.100.200.0/29"
    }
EOF
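The `excludeSubnets` value keeps part of the `10.100.200.0/24` range out of automatic address assignment. To see which addresses a CIDR block covers, here is a small sketch in plain shell arithmetic. The function name `cidr_range` is illustrative and not part of any tool used in this guide, and it handles IPv4 only.

```shell
# Illustrative helper: print the first and last IPv4 address of a CIDR block.
cidr_range() {
  addr=${1%/*}
  prefix=${1#*/}
  # Split the dotted quad into four positional parameters.
  old_ifs=$IFS; IFS=.
  set -- $addr
  IFS=$old_ifs
  ip=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  # Host mask: the low (32 - prefix) bits set.
  host_mask=$(( (1 << (32 - prefix)) - 1 ))
  first=$(( ip & ~host_mask & 0xFFFFFFFF ))
  last=$(( first | host_mask ))
  printf '%d.%d.%d.%d %d.%d.%d.%d\n' \
    $(( first >> 24 & 255 )) $(( first >> 16 & 255 )) \
    $(( first >> 8 & 255 ))  $(( first & 255 )) \
    $(( last >> 24 & 255 ))  $(( last >> 16 & 255 )) \
    $(( last >> 8 & 255 ))   $(( last & 255 ))
}

cidr_range 10.100.200.0/29   # prints 10.100.200.0 10.100.200.7
cidr_range 10.100.200.0/24   # prints 10.100.200.0 10.100.200.255
```

The excluded block therefore spans 10.100.200.0 through 10.100.200.7, and the example pod addresses used later in this guide (10.100.200.11 and 10.100.200.12) fall outside it.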

Create the OVS bridge `vdpa-br` on `ens1f0` and map it to the `l2-network` localnet network. The `ovs-db` entry is a workaround for OCPBUGS-18869:

cat << EOF | oc apply -f -
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: vdpa-network
spec:
  desiredState:
    interfaces:
      - name: vdpa-br
        description: vdpa ovs bridge
        type: ovs-bridge
        state: up
        bridge:
          allow-extra-patch-ports: true
          options:
            stp: true
          port:
            - name: ens1f0
    ovn:
      bridge-mappings:
        - bridge: vdpa-br
          localnet: l2-network
          state: present
    ovs-db:
      external_ids:
        dumb-thing-you-need-todo-because: OCPBUGS-18869
EOF

Create the first pod. For pod2, apply the same manifest with a different `metadata.name`:

cat << EOF | oc apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: vdpa-pod1
  namespace: vdpa
  annotations:
    k8s.v1.cni.cncf.io/networks: vdpa/ovn-kubernetes-a
spec:
  containers:
    - name: debug-network-pod
      image: quay.io/rh_ee_lmilleri/alpine:dpdk
      imagePullPolicy: IfNotPresent
      securityContext:
        runAsUser: 0
        capabilities:
          add: ["IPC_LOCK","SYS_RESOURCE","NET_RAW"]
      volumeMounts:
        - mountPath: /dev/hugepages
          name: hugepage
      resources:
        limits:
          memory: "8Gi"
          cpu: "16"
          hugepages-1Gi: "6Gi"
        requests:
          memory: "8Gi"
          cpu: "16"
          hugepages-1Gi: "6Gi"
      command: ["sleep", "infinity"]
  volumes:
    - name: hugepage
      emptyDir:
        medium: HugePages
EOF

MAC addresses and IP addresses are assigned automatically by the system. The rest of this guide assumes the following values.

pod1:

- MAC address: 0a:58:0a:64:c8:0b
- IP address: 10.100.200.11
- Port representor: eth0
- PCI: 0000:65:00.2
- Vhost-vdpa: /dev/vhost-vdpa-0

pod2:

- MAC address: 0a:58:0a:64:c8:0c
- IP address: 10.100.200.12
- Port representor: eth0
- PCI: 0000:65:00.2
- Vhost-vdpa: /dev/vhost-vdpa-0
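The example MAC addresses follow a visible pattern: each is the prefix `0a:58` followed by the four octets of the pod's IPv4 address in hexadecimal (10.100.200.11 gives `0a:64:c8:0b`). Assuming your cluster follows the same convention, which is an inference from the values shown here rather than something this guide guarantees, the expected MAC can be computed from the IP. `mac_from_ip` is a made-up helper name.

```shell
# Illustrative helper: derive the MAC that matches the 0a:58 + IPv4-octets
# pattern seen in the example values. Always confirm against the real pod.
mac_from_ip() {
  old_ifs=$IFS; IFS=.
  set -- $1          # split the dotted quad into $1..$4
  IFS=$old_ifs
  printf '0a:58:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
}

mac_from_ip 10.100.200.11   # prints 0a:58:0a:64:c8:0b
mac_from_ip 10.100.200.12   # prints 0a:58:0a:64:c8:0c
```

Check the values actually assigned to your pods before using them in the testpmd commands, for example in the pod's `k8s.v1.cni.cncf.io/network-status` annotation, where Multus normally records them.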

In pod1, start testpmd in txonly mode so that it sends traffic to pod2:

ulimit -l unlimited
cd dpdk/build/app
./dpdk-testpmd \
  --no-pci \
  --vdev=net_virtio_user0,path=/dev/vhost-vdpa-0,mac=0a:58:0a:64:c8:0b \
  --file-prefix=virtio \
  -- --port-topology=chained \
  -i --burst=64 --auto-start --forward-mode=txonly --txpkts=64 \
  --eth-peer=0,0a:58:0a:64:c8:0c \
  --tx-ip=10.100.200.11,10.100.200.12

In pod2, start testpmd in rxonly mode to receive that traffic:

ulimit -l unlimited
cd dpdk/build/app
./dpdk-testpmd \
  --no-pci \
  --vdev=net_virtio_user0,path=/dev/vhost-vdpa-0,mac=0a:58:0a:64:c8:0c \
  --file-prefix=virtio -- -i --burst=64 --auto-start --forward-mode=rxonly \
  --rxpkts=64 --eth-peer=0,0a:58:0a:64:c8:0b --tx-ip=10.100.200.12,10.100.200.11

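On the node running pod1, check that the transmit flow (port representor `eth0` to uplink `ens1f0`, with VLAN 33 pushed) is offloaded to hardware:

ovs-appctl dpctl/dump-flows -m type=offloaded | grep ens1f0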

ufid:c6306b1c-caa8-4787-bd8c-a090b7aacf7b, skb_priority(0/0),skb_mark(0/0),ct_state(0/0x27),ct_zone(0/0),ct_mark(0/0),ct_label(0/0),recirc_id(0),dp_hash(0/0),in_port(eth0),packet_type(ns=0/0,id=0/0),eth(src=0a:58:0a:64:c8:0b,dst=0a:58:0a:64:c8:0c),eth_type(0x0800),ipv4(src=10.100.200.11,dst=0.0.0.0/0.0.0.0,proto=0/0,tos=0/0,ttl=0/0,frag=no), packets:247445743, bytes:15836516590, used:1.150s, offloaded:yes, dp:tc, actions:push_vlan(vid=33,pcp=0),ens1f0

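On the node running pod2, check that the receive flow (uplink `ens1f0` to port representor `eth0`, with the VLAN tag popped) is offloaded as well:

ovs-appctl dpctl/dump-flows -m type=offloaded | grep ens1f0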

ufid:ee7ecbe2-bf67-47bd-9b3a-2b0280790359, skb_priority(0/0),skb_mark(0/0),ct_state(0/0x27),ct_zone(0/0),ct_mark(0/0),ct_label(0/0),recirc_id(0),dp_hash(0/0),in_port(ens1f0),packet_type(ns=0/0,id=0/0),eth(src=0a:58:0a:64:c8:0b,dst=0a:58:0a:64:c8:0c),eth_type(0x8100),vlan(vid=33,pcp=0),encap(eth_type(0x0800),ipv4(src=0.0.0.0/0.0.0.0,dst=10.100.200.12,proto=0/0,tos=0/0,ttl=0/0,frag=no)), packets:78372602, bytes:5329318918, used:0.690s, offloaded:yes, dp:tc, actions:pop_vlan,eth0
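The flow dumps are long, and the fields that matter for confirming hardware offload are `in_port`, the packet counter, `offloaded`, `dp`, and `actions`. A minimal sketch that condenses each dumped flow to those fields, assuming the line format shown above (`flow_summary` is a hypothetical helper, not an OVS command):

```shell
# Hypothetical helper: reduce each dumped flow on stdin to the fields that
# show whether it is offloaded and where it forwards traffic.
flow_summary() {
  awk '
    function field(re) {
      # Return the first substring of the current line matching re, or "-".
      if (match($0, re)) return substr($0, RSTART, RLENGTH)
      return "-"
    }
    /ufid:/ {
      print field("in_port\\([^)]*\\)"), field("packets:[0-9]+"),
            field("offloaded:[a-z]+"), field("dp:[a-z]+"), field("actions:.*")
    }'
}
```

Append it to the dump command, as in `ovs-appctl dpctl/dump-flows -m type=offloaded | grep ens1f0 | flow_summary`. For the pod1 flow shown earlier, this yields `in_port(eth0) packets:247445743 offloaded:yes dp:tc actions:push_vlan(vid=33,pcp=0),ens1f0`. Running it twice and comparing the packet counters is a quick way to confirm that traffic is still flowing through the offloaded path.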